Partway through a training run, a GPU dropped offline and nvidia-smi on the server reported:

Unable to determine the device handle for GPU 0000:02:00.0: Unknown Error

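1. Use sudo nvidia-bug-report.sh to inspect the log

Run nvidia-bug-report.sh while training is in progress so that it captures the GPU state and error information at the time of the failure. The resulting log contained: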

Xid (…): 79, pid='<unknown>', name=<unknown>, GPU has fallen off the bus

A reply in one of the referenced NVIDIA forum threads explains:

One of the gpus is shutting down. Since it’s not always the same one, I guess they’re not damaged but either overheating or lack of power occurs. Please monitor temperatures, check PSU.

So the most likely cause is overheating or an insufficient power supply.
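To check whether the same Xid keeps showing up, the kernel log can be filtered for Xid entries. The following is a minimal sketch, not part of the original write-up: the function name list_xid_codes is made up for illustration, and it assumes the log lines follow the "Xid (...): NN" layout shown above.

```shell
# Print each distinct Xid code found in kernel log text read from stdin.
# Assumes lines shaped like:
#   NVRM: Xid (PCI:0000:02:00): 79, pid=..., name=..., GPU has fallen off the bus
# list_xid_codes is a hypothetical helper name, not an NVIDIA tool.
list_xid_codes() {
    grep -oE 'Xid \([^)]*\): [0-9]+' \
        | sed -E 's/.*: ([0-9]+)$/\1/' \
        | sort -n -u
}

# Typical use (needs permission to read the kernel log):
#   sudo dmesg | list_xid_codes
```

If 79 is in the output, the driver logged at least one "GPU has fallen off the bus" event since the last boot.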

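2. Summary of fixes

(1) GPU temperature limit

While training is running, run the following command to log the GPU temperatures every 2 seconds: nvidia-smi -q -l 2 -d TEMPERATURE -f nvidiatemp.log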

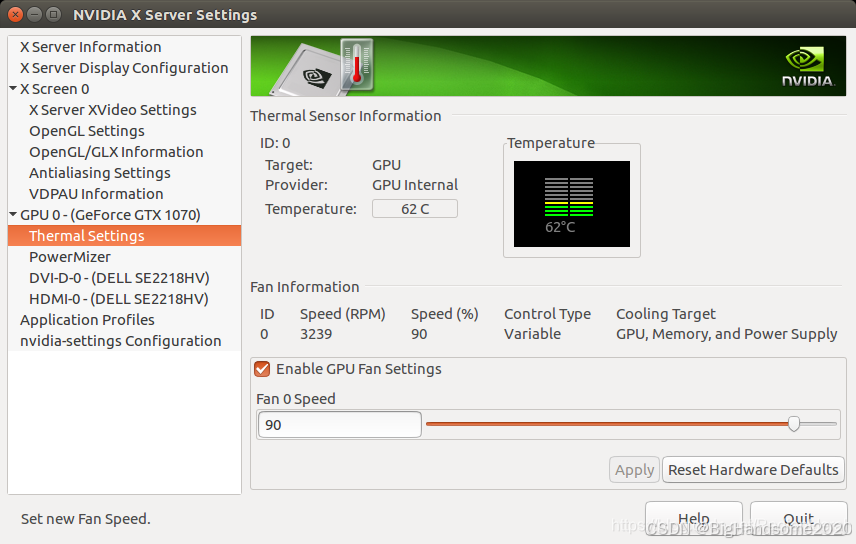

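In the output above, Current Temp is the GPU's current temperature, Shutdown Temp is the temperature above which the GPU shuts itself down and drops offline, and Target Temp is the target temperature (a suitable operating temperature for the GPU).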


If the GPU is overheating, check that its fans are working properly, and try using nvidia-settings to set the fan speed to maximum.

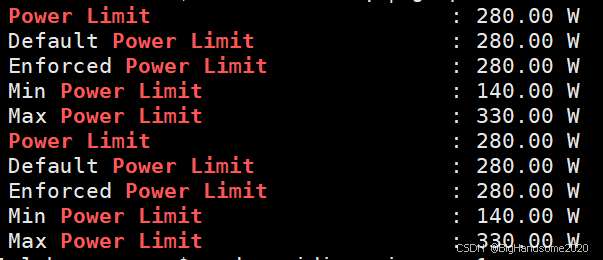
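For a quick check without reading through the full log, nvidia-smi can also print one CSV line per GPU. The sketch below filters that output for cards at or above a chosen temperature. The helper name hot_gpus and the 85 °C value in the usage line are assumptions for illustration; take the real limit from the Shutdown Temp and Target Temp values your own card reports.

```shell
# Read "index, temperature" CSV lines on stdin and print the index of
# every GPU whose temperature is at or above the limit given as $1 (deg C).
# hot_gpus is a hypothetical helper name.
hot_gpus() {
    awk -F', *' -v limit="$1" '$2 + 0 >= limit + 0 { print $1 }'
}

# Typical use (85 is an assumed example threshold):
#   nvidia-smi --query-gpu=index,temperature.gpu \
#       --format=csv,noheader,nounits | hot_gpus 85
```

An empty result means no GPU was at or above the limit at the moment of the query.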

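(2) GPU power limit

My machine runs two 1080 Ti cards on an 850 W power supply, which I suspected was not enough, so I set a power limit: sudo vim /etc/systemd/system/nvidia-power-limit.service

Write the following into the file: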

[Unit]

Description=Set NVIDIA GPU Power Limit

[Service]

Type=oneshot

ExecStart=/usr/bin/nvidia-smi -pl 200

[Install]

WantedBy=multi-user.target

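Enable the service so the power limit is applied automatically at boot: sudo systemctl enable nvidia-power-limit.service

To apply the limit right away without rebooting, also run: sudo systemctl start nvidia-power-limit.service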

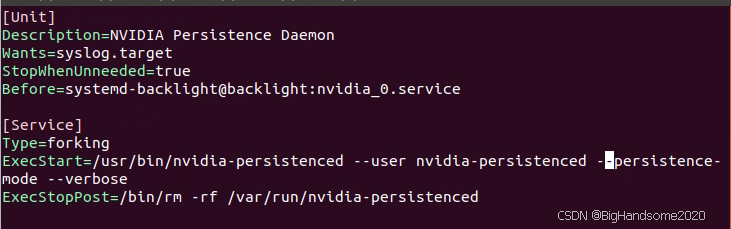
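A rough power budget shows why the cap helps. The sketch below is only back-of-the-envelope arithmetic: the 250 W figure is the reference board power of a 1080 Ti, while the 200 W allowance for the CPU, drives, and the rest of the system is an assumption, not a measurement from my machine. Short power spikes can exceed these steady-state numbers, so treat the result as optimistic.

```shell
# psu_headroom PSU_WATTS GPU_COUNT PER_GPU_WATTS OTHER_WATTS
# Prints the watts left over after the GPUs and the rest of the system.
# psu_headroom is a hypothetical helper; OTHER_WATTS is a user estimate.
psu_headroom() {
    echo $(( $1 - $2 * $3 - $4 ))
}

# Two cards at the stock 250 W on an 850 W PSU, assuming 200 W for the rest:
#   psu_headroom 850 2 250 200
# Same system with the 200 W cap from the unit file above:
#   psu_headroom 850 2 200 200
```

Under these assumptions the cap raises the steady-state margin from 150 W to 250 W.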

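(3) Persistence mode

Enable persistence mode on both cards so the GPU driver stays loaded even when nothing is running, which avoids loading and unloading the driver repeatedly. PS: with persistence mode off (the default), a lightly loaded GPU goes to sleep and has some chance of failing to wake up afterwards. Running nvidia-smi -pm 1 (root privileges required) keeps the GPUs in a ready state; you can run it before starting training.

To turn persistence mode on automatically at boot: sudo vim /lib/systemd/system/nvidia-persistenced.service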

Change

ExecStart=/usr/bin/nvidia-persistenced --user nvidia-persistenced --no-persistence-mode --verbose

To:

ExecStart=/usr/bin/nvidia-persistenced --user nvidia-persistenced --persistence-mode --verbose
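After editing the unit file, systemd has to re-read it (sudo systemctl daemon-reload) and the service has to be restarted before the change takes effect. To confirm the result, the persistence state of each GPU can be queried. The following is a minimal sketch; the helper name gpus_without_persistence is made up, and it assumes nvidia-smi reports the state as Enabled or Disabled in its CSV output.

```shell
# Read "index, persistence_mode" CSV lines on stdin and print the index
# of every GPU whose persistence mode is not reported as "Enabled".
# gpus_without_persistence is a hypothetical helper name.
gpus_without_persistence() {
    awk -F', *' '$2 != "Enabled" { print $1 }'
}

# Typical use:
#   nvidia-smi --query-gpu=index,persistence_mode \
#       --format=csv,noheader | gpus_without_persistence
```

An empty result means every GPU has persistence mode on.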

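(4) Update the kernel, the NVIDIA driver, and all other available updates.

(5) Power off, clean out the dust, and reseat the GPU and its cables (to rule out poor contact or PCI bus problems).

(6) If none of the above helps, remove one card and test with only one installed, then swap and test with only the other (to check whether one of the cards is faulty).

(7) Replace the power supply and power cables (insufficient wattage or aging cables). Make sure the power connections are solid, consider a dual-PSU setup, and confirm the total wattage is enough for multiple GPUs.

(8) Replace the motherboard (motherboard hardware fault).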

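PS: the problem is usually caused by one of the first three items, though the power supply or its cables can also be at fault. After I applied (1)-(4), the error and the dropped GPU no longer occurred. Work through those four first and only consider the other causes if the problem persists.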

References:

https://blog.csdn.net/weixin_42792088/article/details/134176781

https://blog.csdn.net/qq_44850917/article/details/135431204

https://blog.csdn.net/qq_54478153/article/details/128202846

https://zhuanlan.zhihu.com/p/375331159

https://forums.developer.nvidia.com/t/unable-to-determine-the-device-handle-for-gpu-000000-0-unknown-error/244860

https://forums.developer.nvidia.com/t/gpu-fans-go-to-max-and-graphics-drivers-hang/222069/4

https://blog.dreamforme.top/2023/11/03/%E8%A7%A3%E5%86%B3-Unable-to-determine-the-device-handle-for-GPU-Unknown-Error-%E9%97%AE%E9%A2%98/

https://forums.developer.nvidia.com/t/setting-up-nvidia-persistenced/47986/10

https://cn.linux-console.net/?p=31021

https://blog.csdn.net/RadiantJeral/article/details/104479985

网硕互联帮助中心
